I spent the last two months porting the Docker command-line interface to Windows with my colleagues on the Microsoft Azure Linux team. Starting with Docker 1.6, a Windows client ships with Docker’s official release. This is my first time heavily participating in the development of an open source project, and I want to tell you how awesome it was.

We recently announced the first version of “Docker Client for Windows” on the Azure Blog and the Docker Blog, and this is its making-of story!

Now Available: @Docker Client for #Windows. Read more, plus download & install: http://t.co/tuPzh4uRdH

— Microsoft Azure (@Azure) April 16, 2015

Background

Docker is now a two-year-old open source project and still has extremely high participation, with nearly 1,000 contributors and pull requests arriving every day. It is definitely one of the most popular open source projects today.

After Microsoft’s partnership announcement with Docker, we started to actively work on making the Docker ecosystem a first-class experience for people using Windows and Microsoft Azure. Our first task was to port the Docker client to Windows. Within two days, we were able to get the Docker client compiling on Windows!

Then began the bug fixes, stabilization and porting the test suite…

Who wants @Docker for Windows? Got it cross-compiling tonight!

🐳 cc:@solomonstre #docker pic.twitter.com/bGOn0jmX8m

— Ahmet Alp Balkan ☁︎ (@ahmetalpbalkan) October 28, 2014

Work done

In the past two months, we have submitted 70 pull requests to fix various problems, both in the client code and in the test suite. Although many were minor changes, my colleagues and I have fixed bugs and even implemented some non-trivial components, like ANSI terminal emulation for Windows in Go.

The Go Advantage

Docker is written in Go, a garbage-collected and statically compiled programming language from Google. Docker is most probably the most popular project written in Go. (I think most people learn Go just to contribute to Docker and its ecosystem nowadays[citation needed].)

What makes it easy to port software written in Go is build tags (build constraints). They are similar to #ifdef preprocessor directives, but declared per file. By using them, we were able to provide feature implementations for both Windows and Linux very cleanly and easily.

For instance, a source file named stat_unix.go is used when the binary is compiled for Unix, and stat_windows.go when it is compiled for Windows, where it provides the implementation Docker needs using Windows APIs. (Go treats a _windows file-name suffix as an implicit build constraint; since unix is not an operating-system name Go recognizes in file names, a file like stat_unix.go needs an explicit build tag.) This way, Go forces a clean solution by avoiding inline preprocessor conditionals.

Also, compile-time type checks helped us catch bugs that could have gone unnoticed in less type-safe languages. By using build tags, we also wrote platform-specific unit tests, which made porting very easy.

Linux-ism(s) in code

Docker is essentially designed to be a “self-sufficient runtime for Linux containers” and was therefore developed with tons of “Linux-isms” that closely couple the code and behavior of Docker to Linux system calls and/or the Unix way of doing things.

The first pull request we submitted to Docker was just to get the Docker client compiling on Windows, nothing more. Although most of the functionality just worked, because the client talks to the Docker engine (which does the actual magic and heavy lifting) over a REST API, there were some Linux-isms and other issues we fixed over time to release a solid Windows client:

- Path separator problems

- Linux-specific packages indirectly imported by client code

- Unix file properties and permission bits

- Emulating ANSI terminals in Windows

- Designing the Windows installation experience

- Fixing the Integration test suite

Path Problems

Unix file paths are separated with / and Windows paths are separated with \. Absolute Windows paths also include a drive letter at the beginning, like C:\. This caused a lot of problems when building Docker images with the Dockerfile instructions ADD/COPY, and in command-line arguments to Docker commands, like docker cp, that describe volumes, working directories, etc.

Fixing most of these was easy, because the erroneous path manipulation was done with the Go path package, which works only with forward-slash-separated (Unix-style) paths, instead of path/filepath, which works with the paths of the target operating system the binary is compiled for.

However, this does not entirely fix the problem, because the Unix paths addressing the Linux container’s filesystem should still use path.

Validating Windows paths from code compiled for Linux/Mac will still be a challenge, because both the path and path/filepath packages work with Unix paths when the binary is compiled for Linux/Mac. Therefore, moving all path validation logic to the Docker engine (daemon), managed through the Docker Remote API, makes more sense. This is crucial to support deploying Windows Containers from Linux/Mac clients in the future.
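
To illustrate why this validation is awkward on the client, the sketch below shows filepath.IsAbs rejecting a Windows path when compiled for Linux/Mac, alongside a deliberately simplistic, hypothetical drive-letter check. The check ignores UNC paths and other Windows path forms, which is part of why centralizing validation in the daemon is attractive.

```go
package main

import (
	"fmt"
	"path/filepath"
)

// looksLikeWindowsAbs reports whether p has the form `C:\...` or `C:/...`.
// Hypothetical and intentionally minimal: UNC paths (\\server\share) and
// other Windows path forms are not recognized.
func looksLikeWindowsAbs(p string) bool {
	if len(p) < 3 {
		return false
	}
	c := p[0]
	isLetter := (c >= 'a' && c <= 'z') || (c >= 'A' && c <= 'Z')
	return isLetter && p[1] == ':' && (p[2] == '\\' || p[2] == '/')
}

func main() {
	p := `C:\Users\me\app`
	// Compiled for Linux/Mac, filepath only knows Unix rules, so this
	// Windows path is treated as relative.
	fmt.Println(filepath.IsAbs(p))      // false on Linux/Mac
	fmt.Println(looksLikeWindowsAbs(p)) // true on every platform
}
```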

The reason the Docker Mac client was an easier port than the Windows client is mostly the lack of these path problems between Linux and OSX. It also helps that Linux system calls and signals generally have matching Darwin counterparts.

A few other Windows vs. Unix distinctions

Windows filesystems like NTFS are case-insensitive whereas almost all Unix filesystems are case-sensitive. Although this did not cause many problems in the product code, we did have to fix or skip some test cases on Windows.

One interesting problem was figuring out the path of the user’s home directory. Go’s os/user package provides a way to determine it; however, on Mac (darwin), Go uses cgo to find the home directory by default, which prevents cross-compiling any binary that uses the package. Therefore, Docker was using the $HOME environment variable throughout the codebase. For Windows, we had to replace all of those uses with a package that abstracts this out and handles each platform correctly: on Windows, the user’s home directory is stored in the %USERPROFILE% environment variable.

Indirectly imported Linux-specific packages

Parts of the client code paths were using some simple utility packages which then directly or indirectly imported packages from libcontainer or docker/daemon-like packages.

This problem made itself obvious through compile-time errors. However, it is extremely hard to identify which import leads to a compile-time error raised from some indirectly imported package. Some of those packages shouldn’t have been imported from the client code path at all. It was especially hard to diagnose when the dependency chain looked like this:

- client binary
  - imports x (and 10 other pkgs)
    - imports y (and 20 other pkgs)
      - (…many other packages)
        - imports daemon
          - imports (…many other packages)

Obviously, the daemon package does not compile on Windows (because it is full of references to Linux-specific syscalls for the Linux container runtime). However, that package somehow got indirectly imported into the client code.

Unfortunately, the Go compiler does not show the import chain when compilation fails. So I had to spend countless hours going through import graphs like this to find the full import chain manually.

Most of these packages, thankfully, were indirectly imported for functionality in the utility methods which probably should not have been placed in those packages in the first place. That’s why we took time to reorganize those methods into independent utility packages.

Some Go packages in the Docker codebase were implemented to serve more than one responsibility, which is somewhat understandable for a project developed at this speed (and the Go standard library sort of encourages that).

The Docker folks probably didn’t imagine Windows support and Windows Containers were coming, so all these problems are understandable in a project moving at this pace.

Unix file permissions and permission bits

Docker makes use of UNIX permission bits, a.k.a. chmod bits (read/write/execute for owner/group/other): images built from Linux/Mac inherit the existing permission bits of the files added to them.

In Windows, file attributes have no clear separation of group/others permissions, because permissions are stored separately on the system as ACL settings, and the Go syscall package implements none of these Win32 APIs. Even though Windows supports file permissions, they cannot be mapped 1:1 to Unix concepts.

In fact, os.Stat(file).Mode().Perm(), which is supposed to return chmod bits, naively returns 0666 (-rw-rw-rw-) on Windows for every writable file (0444 for read-only ones) and 0777 (drwxrwxrwx) for directories. This is dangerous for Go software cross-compiled for Windows that relies on permission bits for security.

Since many people use shebang scripts or binaries as the ENTRYPOINT program in Docker images, we had to add the +x bit to all files added from Windows to preserve this behavior. We cannot determine whether those files are executable, simply because Windows has no concept of “executable files” in the POSIX sense.

After adding +x to everything and keeping the r/w bits on group/others, we had to add a warning to the docker build command on Windows about these low-fidelity permission bits. Windows Docker users should be more careful with the permissions of any secrets they put inside a container image packaged from Windows.

Emulating the ANSI Terminal in Windows

UNIX terminal applications can clear the screen, move the cursor, and do all sorts of terminal magic by using the 37-year-old ANSI/VT-100 terminal control escape sequences, which involves printing non-printable characters to the terminal.

If a user runs docker run -i ubuntu on their development machine, the Docker engine sends the terminal output (which has ANSI sequences) to the client, and the client sends its stdin back to the engine.

It’s notoriously hard to do this on Windows because the Windows console (cmd.exe or PowerShell) does not support ANSI codes. Historically, they were supported through ANSI.SYS which was removed from Windows starting with Windows 7.

We could have worked around this by telling our users to install ansicon, which provides an ANSI.SYS replacement, or to use ConEmu, which interprets ANSI codes itself and then sends the output to the Windows console.

However, neither of these is really great, and neither fully implements the ANSI standard. We wanted to provide a native experience with the Docker client, so we implemented an open source ANSI emulation package in Go in Docker’s codebase.

Several other colleagues from my team (Sachin Joshi, Brendan Dixon and Gabriel Hartmann) worked on this. They came up with a console emulator package that reads the ANSI output, parses it, and then emulates the ANSI behavior by making the relevant Win32 syscalls. This was by far the biggest pull request we have sent so far.
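
To make the “reads the ANSI output, parses it” step concrete, here is a minimal, hypothetical tokenizer that splits terminal output into plain text and CSI sequences (ESC, then [, then parameter bytes, then a final byte). It is not the package described above: it handles only CSI sequences, ignores sequences split across reads, and leaves out the emulation step, where each token would be mapped to a Win32 console call such as SetConsoleCursorPosition.

```go
package main

import "fmt"

// token is either a run of plain text or a parsed CSI escape sequence.
type token struct {
	text   string // plain text (empty for CSI tokens)
	params string // CSI parameter/intermediate bytes, e.g. "1;5"
	final  byte   // CSI final byte, e.g. 'H'; 0 for text tokens
}

// tokenize splits s into text and CSI tokens. Malformed or truncated
// sequences are left in the surrounding text run.
func tokenize(s string) []token {
	var out []token
	start := 0 // beginning of the current run of plain text
	i := 0
	for i < len(s) {
		if s[i] != 0x1b || i+1 >= len(s) || s[i+1] != '[' {
			i++
			continue
		}
		// Parameter (0x30-0x3F) and intermediate (0x20-0x2F) bytes.
		j := i + 2
		for j < len(s) && s[j] >= 0x20 && s[j] <= 0x3f {
			j++
		}
		// A valid final byte lies in 0x40-0x7E.
		if j >= len(s) || s[j] < 0x40 || s[j] > 0x7e {
			i++
			continue
		}
		if i > start {
			out = append(out, token{text: s[start:i]})
		}
		out = append(out, token{params: s[i+2 : j], final: s[j]})
		i = j + 1
		start = i
	}
	if start < len(s) {
		out = append(out, token{text: s[start:]})
	}
	return out
}

func main() {
	// "ESC[2J" clears the screen; "ESC[1;5H" moves the cursor to row 1, column 5.
	for _, t := range tokenize("\x1b[2Jhi\x1b[1;5H") {
		if t.final == 0 {
			fmt.Printf("text %q\n", t.text)
		} else {
			fmt.Printf("CSI params=%q final=%c\n", t.params, t.final)
		}
	}
}
```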

It turns out that emulating ANSI is not as simple as it looks at first. We are rewriting this code, and it might end up in a separate repo, or maybe in the Go standard library someday.

Something like this, an ANSI emulator for Windows written in Go, is certainly a reusable library for many Go programs on Windows (including Docker projects like Compose/Machine).

The Installation Experience

If you have tried getting Docker running on your Windows machine, Boot2Docker is your best bet. It boots a small Linux instance on VirtualBox with Docker pre-installed. It provides a great experience for developers using Mac OS X and Windows to get familiar with Docker.

Until now, Boot2Docker was using the boot2docker ssh command to enter a Linux shell with the docker command in it. Now that we have a docker.exe, we have changed the experience to start a Windows command-line window with the environment variables set to point at the Docker engine running inside the VM, and with docker.exe in the execution %PATH%. This eliminated the need to SSH into the VM and run a Linux bash prompt (which is quite a learning curve for Windows users who have never used Linux before).

We modified the Boot2Docker installer (written in ancient Inno Setup) to bundle and install docker.exe in %PATH%, and fixed the boot2docker shellinit command output to work with cmd.exe/PowerShell.
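
A sketch of what shell-specific shellinit output involves, assuming a simple map of variables; shellinitLines is hypothetical and not boot2docker’s implementation. DOCKER_HOST, DOCKER_CERT_PATH and DOCKER_TLS_VERIFY are the variables the Docker client reads, while the values shown are placeholders.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// shellinitLines renders environment variables as commands for the given
// shell: "cmd", "powershell", or anything else for a POSIX shell.
// Hypothetical helper, not boot2docker's actual code.
func shellinitLines(shell string, env map[string]string) []string {
	keys := make([]string, 0, len(env))
	for k := range env {
		keys = append(keys, k)
	}
	sort.Strings(keys) // deterministic order for display and testing

	lines := make([]string, 0, len(keys))
	for _, k := range keys {
		v := env[k]
		switch shell {
		case "cmd":
			// cmd.exe keeps quotes as part of the value, so none are added.
			lines = append(lines, fmt.Sprintf("set %s=%s", k, v))
		case "powershell":
			// Single-quoted PowerShell strings escape ' by doubling it.
			lines = append(lines, fmt.Sprintf("$Env:%s = '%s'", k, strings.ReplaceAll(v, "'", "''")))
		default:
			// POSIX single quotes: close, emit an escaped quote, reopen.
			lines = append(lines, fmt.Sprintf("export %s='%s'", k, strings.ReplaceAll(v, "'", `'\''`)))
		}
	}
	return lines
}

func main() {
	// Placeholder values: 192.0.2.10 is a documentation address and the
	// certificate path is made up.
	env := map[string]string{
		"DOCKER_HOST":       "tcp://192.0.2.10:2376",
		"DOCKER_CERT_PATH":  `C:\Users\me\certs`,
		"DOCKER_TLS_VERIFY": "1",
	}
	for _, shell := range []string{"cmd", "powershell"} {
		for _, line := range shellinitLines(shell, env) {
			fmt.Println(line)
		}
	}
}
```

The differences look cosmetic, but each shell has its own assignment syntax and quoting rules, so a single bash-style output cannot simply be pasted into cmd.exe or PowerShell.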

We also released a Chocolatey package for the Docker CLI (Chocolatey is the unofficial apt-like package manager for Windows). Now that Microsoft has announced that PackageManagement (which works well with existing Chocolatey packages) will ship with Windows 10, users can install the latest Docker command-line interface with a one-line command on Windows (currently not possible on Mac OS X, as you’ll need to install Homebrew first).

You can watch my 90-second screencasts about Installing Boot2Docker and Installing Docker Client on Windows via Chocolatey on YouTube. It is wonderful that we made getting started with Docker on Windows so quick. I’m hoping to continue maintaining the Chocolatey/PackageManagement packages going forward.

Fixing the Integration test suite

This was the part that took most of my time, despite having the least visible impact on our users.

Docker has a huge integration-cli test suite, which tests the behavior between the Docker engine and the Docker command-line executable.

The Docker CLI uses a REST API called the Docker Remote API to manage the Docker engine (daemon) on a remote machine. The integration-cli test suite provides most of the test coverage for the Docker engine outside of the unit tests within individual packages.

One could argue that it’s not the best idea to rely on a CLI-specific test suite to test the entire Remote API/daemon behavior. In my opinion, the CLI and the Remote API should be tested separately (there are some tests directly testing the REST API, but they are few and far between).

The main problems with the CLI integration test suite were:

Same host assumption: Many tests assumed not only that they were running on Linux, but also that they were running on the same machine as the Docker engine. However, we had to set up a remote Docker daemon for our Windows testing (there is no Docker daemon for Windows, yet) and connect to it:

- Many tests were verifying the side effects of the tested API calls by inspecting the Docker engine host’s filesystem or iptables (because the tests were running on the machine with the daemon, they could examine those). Needless to say, this is not best practice for testing. We had to skip those tests on Windows.

- Some timing-related tests (mainly docker events tests) were failing because the tests used the clock on the client machine, whereas our test Docker daemons were running remotely, and the resulting clock skew (even though only 1-2 seconds) caused all sorts of problems.

More Linux-isms: Many tests used exec /bin/bash -c “this | that | and-that” style piped executions, and some tests hard-coded Unix paths like /var/lib/docker or /tmp/, assuming they would never be executed on Windows. I was able to fix many of those tests.

Unix and Windows utilities with the same names: Some tests were calling programs like mkdir, find, and timeout. The problem is that these programs also exist on Windows, but they do not accept the same arguments, which breaks the expected behavior.

Having this test suite helped us a great deal in discovering little bugs sneaking into the client code. Some we probably would not have discovered with manual testing.

After over 50 pull requests, we fixed the entire test suite, got it running on Windows, and @jessfraz set up a continuous integration (CI) system for all pull requests sent to Docker to run these tests on Windows, inside VMs hosted on Azure! Windows tests are now a gate for merging pull requests.

🎉 The entire @docker cli test suite is finally green on Windows! This is my past 2 months…

https://t.co/TSp6VR02nb pic.twitter.com/SM48B572cR

— Ahmet Alp Balkan ☁︎ (@ahmetalpbalkan) March 12, 2015

That was my reaction to a tiny green .GIF file we saw after one month’s hard work.

When @ahmetalpbalkan is on a #Docker PR spree :-) pic.twitter.com/EN4jvEOb1q

— Arnaud Porterie (@icecrime) February 14, 2015

What’s Next for Windows?

My colleagues on the Hyper-V team have started to work on making Docker able to manage Windows Server Containers and Hyper-V Containers! This is some amazing work, and you can watch them contribute under the os/windows label on GitHub and participate in the discussions.

Participating in Community

All Docker engineers and core maintainers I interacted with were tremendously helpful and welcoming. They actively reviewed my code and proposals for two months. (It takes immense patience to be a maintainer with code-review responsibilities in a project like Docker, which receives more than 100 pull requests in some months; plus, I am an impatient person.)

Almost all of my pull requests were reviewed by at least 2 maintainers and merged within 24 hours: that’s a crazy speed for an open source project as big as Docker. (This must be what they refer to as “living on the bleeding edge.”) I am very grateful to every single one of them; they simply rock!

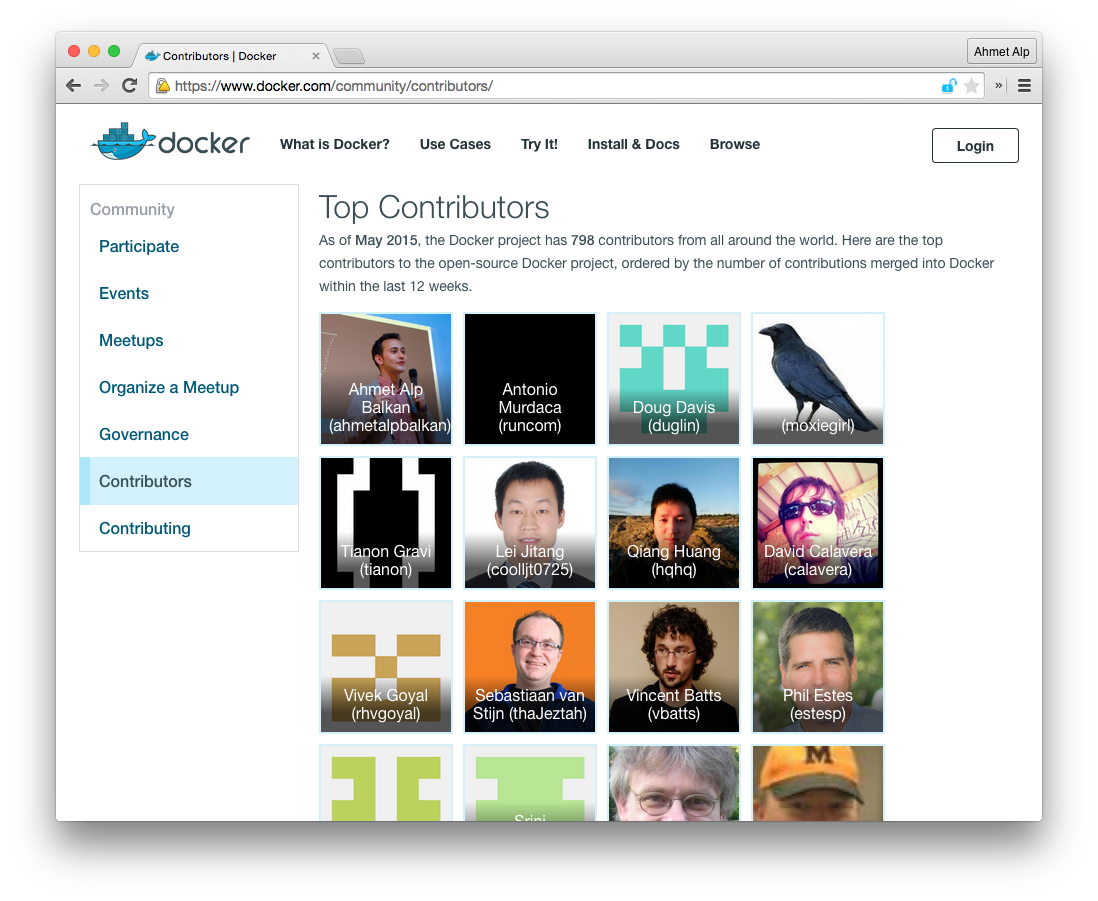

Together, these pull requests made me the top non-employee contributor of the past quarter. This is an immense privilege for me, and I take pride in my participation:

(Enjoying probably my last days on the Docker top contributors list, at the time of writing this post.)

Here's a video of @Docker Client for Windows Building Docker's Dockerfile from Windows. 🐳 pic.twitter.com/3UpOEUlxO6

— Ahmet Alp Balkan ☁︎ (@ahmetalpbalkan) March 13, 2015

If you feel like contributing to Docker, go ahead and start submitting some pull requests! You will be surprised how welcome your contributions are!

(Special thanks to Saruhan Karademir and Snesha Foss for proof-reading drafts of this).
